October is AAC awareness month. The acronym AAC stands for Augmentative and Alternative Communication, and according to the American Speech-Language-Hearing Association website “. . . includes all forms of communication (other than oral speech) that are used to express thoughts, needs, wants, and ideas. We all use AAC when we make facial expressions or gestures, use symbols or pictures, or write. People with severe speech or language problems rely on AAC to supplement existing speech or replace speech that is not functional. Special augmentative aids, such as picture and symbol communication boards and electronic devices, are available to help people express themselves. This may increase social interaction, school performance, and feelings of self-worth.”

Our family has been utilizing various speech-generating devices since June 2009, shortly after my twins received their autism diagnosis at age two, and in public school since they entered the classroom at age three. Almost six years later, I can hardly believe how fast the time has flown by. This can make it challenging to ensure they continue to progress and build language and communication skills in the school setting with appropriate IEP goals, staff training and support. The fact that technology has evolved so much since we started is exciting, especially compared to the methods we were using just a few short years ago.

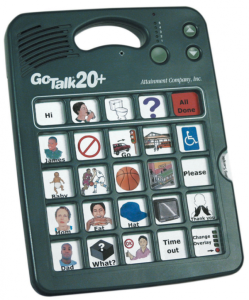

In the fall of 2009, after weeks of training in the language lab at Georgia State University, it was time for my twins to bring home their GoTalk!20+ speech device so we could integrate it into our daily routine. I still remember sitting down at our tiny table with this large green plastic unit and R pressing the center square (“Cheese!”), running into the kitchen, pulling out a large Costco brick of cheese singles, and plopping it down on the table excitedly. I was elated. While we had been working on exercises at the training sessions for the toddler language study, we had to use certain words, but this was spontaneous. He was communicating with me, and the moment was one I had waited for 2½ years. Happiness thankfully overshadowed the fact that the voice coming from the GoTalk was my own. The program coordinator suggested that I find a little boy to record the words, but who was I going to ask? “Um, hi there, parent in R and K’s inclusion preschool. Would you mind if I borrowed your child to record the speech device buttons for my little boys who can’t speak? It’s only 100 words (times two, mind you).” I had a tough time imagining even having that awkward conversation, let alone having to hear another child’s voice repeatedly asking for cheese and french fries. It just seemed so . . . wrong. I recorded each word as clearly as possible, like I was doing a voiceover for a kids’ public television show. Go, Stop, Help Me, More, Bathroom: these were the top row.

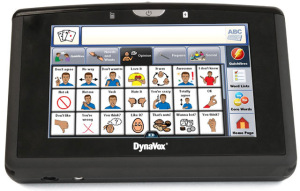

It has been over four years since they graduated to a Dynavox speech-generating device. Now we were in the big time! That was when we first met Kenny, a computer-generated child voice created for speech devices. The voice is somewhere between a boy and a girl who has sucked up part of a helium balloon. He also often mispronounces words in that charming childish way, but to correct him you have to go into the settings and reprogram how the word is spelled to get the proper pronunciation. I was relieved to meet Kenny, since more than a year earlier the boys had figured out how to unlock the recording feature on the GoTalk to secretly record my conversations (for about 10 seconds) or some odd noise in the environment they found entertaining.

Now we have Proloquo2Go on the iPad. The voices have really evolved with the software upgrades. While writing this piece, I found a great post on the Assistiveware website about the story behind the first text-to-speech voices created for and by children. Josh and Ella, the new American voices, are real children who spent several days recording the speech used in this app. It was a surreal experience to see the child who is the voice for my own boys being interviewed.

I am amazed by technology and how far we have come from the days of “talkers,” as they refer to them in public school. These devices and apps are expensive, but realize that 2,500 hours of work went into creating a natural human voice in order to truly include our children without them sounding like a Speak & Spell. The other part of the equation is ensuring that everyone in the child’s environment incorporates the device into their exchanges. We have had instances where the device was put away because “He kept pressing the same button over and over” or “We went outside (without it) and he kept hanging on me, and I finally got him to stop,” or where it was put in front of the classroom and not at the desk. The device is not a machine that the verbal person directs the user to answer a question on. It takes a lot of conscious effort to respond to a child on their device while speaking. Doing so demonstrates that the device is important and is not just a chore or demand. Sure, with a little time you can learn how to navigate the screens and how to technically manage it. Humanizing a touchscreen tablet with stick figure symbols and reminding yourself that you are effectively teaching and learning a new language is another thing entirely.

Just for a minute, imagine how it would be if you had to plan your thoughts, locate the words, navigate through multiple screens, then touch a bar to read the sentence aloud. I am amazed that my kids can do this, but not surprised that their communication skills are not where I would like them to be, because it is a lot of work. You really have to be motivated and have the motor planning skills. Other people in their environment need to set the example and interact with them in the same way, just like teaching a baby to speak by demonstrating the sounds for them.

Back to the cheese. Food is a great motivator and is how my boys learned to use AAC to request cookies and raisins in a therapy room. Even though the voice wasn’t their own, the fact that they were interacting in a meaningful way for the first time was the first game-changer for me. Six months of speech therapy had yielded nothing, and their motor planning wasn’t strong enough for them to learn sign language beyond “please” and “more.” If it weren’t for all of the research (thank you, Georgia State Toddler Language Project), innovation (Acapela Group, Mayer-Johnson, and Assistiveware, to name a few), and hard work of so many people in our community, I would have never believed my sons had the potential. They were 25 months old at their official autism diagnosis, with the expressive language of 0-3 months and the receptive language of 6 months. They started with an eight-spot device. Six years later they are using an expanded vocabulary, typing in Angry Birds as a request. Inclusion is more than just putting kids of different abilities in the same space and hoping they will learn from each other. It is creating a dialogue, facilitating interaction, and showing the child that they matter, that they have a voice. I am so grateful that this voice no longer sounds like a robot; being different is already hard enough.

One thing I cannot stress enough is how important it is to do the best you can as a parent to remain involved in the training process, not only for yourself and other family members, but to make sure that everyone across all environments at school has support and training as well. Part of awareness for me is learning that just because someone says they “know how to use AAC” that may not be enough. It involves so much more than just turning on a device, starting up an app or familiarizing yourself with the locations of the core vocabulary. If you request an Assistive Technology assessment through your child’s school, be sure that you also include teacher and staff training and support in your IEP document when a device is selected. A great resource for school training is this website. My personal favorite is PrAActical AAC. If you are able, bring your child’s private SLP to the meeting to assist in writing goals and to help coordinate; ours was the most effective team member we had in getting necessary training in place. Implementation planning is essential to ensure that programming is personalized with vocabulary that is relevant to your child as well as making sure that it is constantly updated. My twins’ school uses the News2You picture-based curriculum in the classroom, as well as the Unique Learning System. Our next step is transitioning to the Dynavox Compass on the T-10, but it also can be purchased as a stand-alone app.

Seeing how far speech-generating devices and apps have come in the four years since our first Dynavox V, I am sure they will only become more accessible as they get better and more affordable, especially if more parents are able to help their children maximize the technology’s (and their own) potential.

~ Karma

Karma is a mom to four kids that spend way too much time navigating their dirty SUV through the urban sprawl that is metro Atlanta. She proudly supports Generation Rescue in the role of parent mentor for their Family Grant program. When asked, “How do you do it?” the answer is simple: coffee, Belgian ale, and an odd sense of humor. She is a co-author of Evolution Of A Revolution: From Hope To Healing and is a member of TEAM TMR, a not-for-profit organization created by the founders of The Thinking Moms’ Revolution. For more by Karma, <a>CLICK HERE</a>.

I have a Dynavox. It’s broken, but I want to know how I can get it fixed or how I can get an up-to-date model for a non-verbal seventeen-year-old young man.

Hi, my son has the Dynavox V; its weight is ridiculous. Do you know if I could turn this one in for a smaller device? He is seventeen and non-verbal. Please respond.

Carl and Sandra